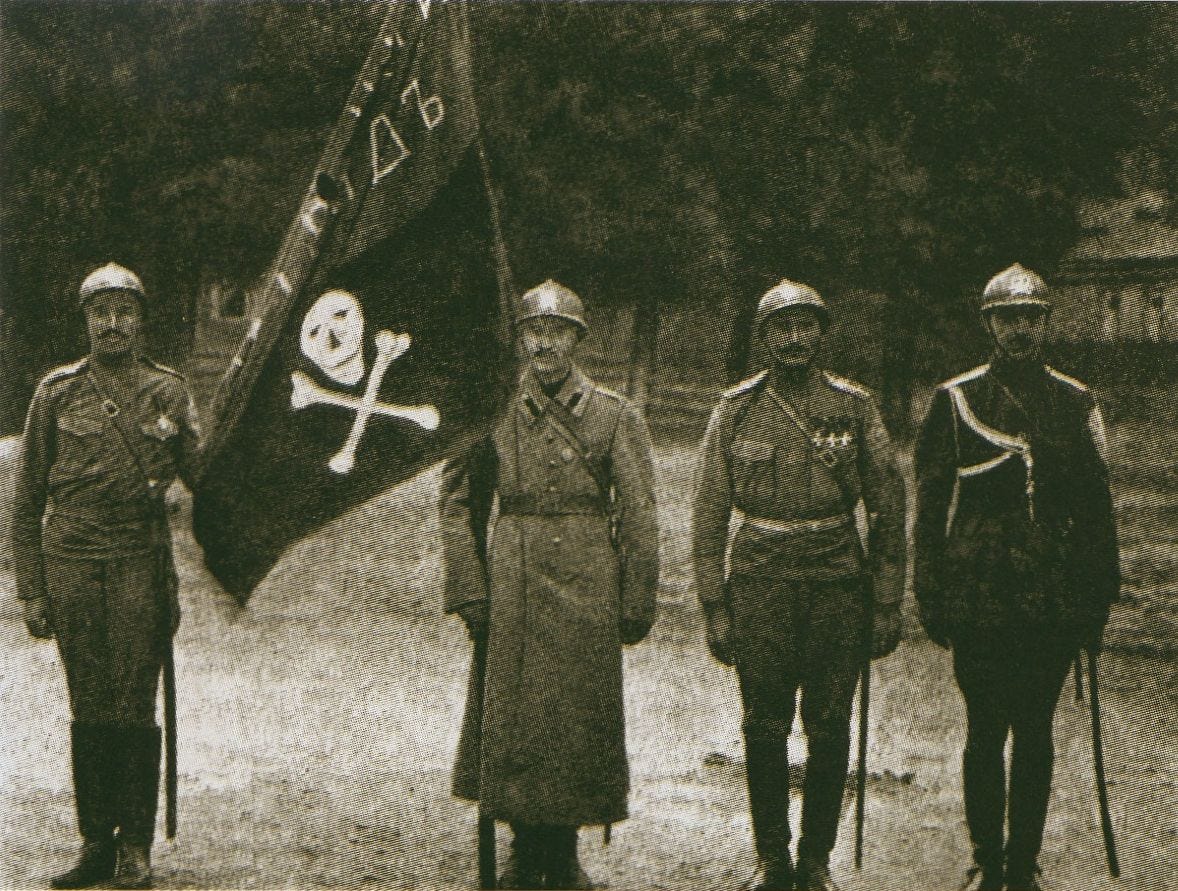

Anti-Bolshevik Soldiers during the Russian Civil War

In the 1960s, the modern environmentalism movement emerged in the United States. The early environmentalists were an ideological faction linked by their dedication to preserving nature from human development, including construction and pesticides. They were particularly concerned about protecting the lives of animals and preserving natural beauty, and projected a general skepticism about human intervention in natural systems. To this end, they fought engineering projects on major natural sites, attempted to protect animal habitats, and worried greatly about the consequences of overpopulation. They maintained these concerns throughout the decades: there was no reason for them to stop caring deeply about nature, and the threats to nature did not disappear.

In the last few decades, the scientific community has presented the world with the near certainty of large negative consequences from human effects on the environment. Massive industrial and consumer emissions and deforestation have put humanity as a whole in a difficult crisis which warrants serious responses. Environmentalists have taken the lead on the response, and responding to global warming has largely been counted as an environmental issue, a consensus validation that environmental concerns matter and have always mattered.

While global warming is conceptually linked to the earlier environmentalism, the concerns are actually quite different. Global warming significantly endangers human life and human civilization, and there are very good reasons to care about it even if one does not care for animals or nature. Global warming presents such a significant danger, in fact, that if a solution were to destroy the Amazon rainforest rather than preserve it, there would be very good moral reasons to proceed with the destruction. But this is not how history has worked out.

Many of the trends that environmentalists have been concerned with have proven to be key contributing factors to a potential global catastrophe, which leads many to claim that they were right after all, and that everyone should be an environmentalist. However, environmentalists never claimed that what they were actually concerned about was a future catastrophe affecting even urban human life; they claimed that what they were concerned about was the destruction of nature. Their moral project would remain perfectly consistent if the situation were reversed and they were attempting to protect the rainforest even though its destruction would save humanity. Despite the easy conflation, environmentalism and action against global warming are two entirely different moral causes concerned with distinct moral ends.

The merging of the environmental movement with the action against global warming has created many unfortunate mismatches of priorities. Many in the environmental movement argued for economic degrowth and restrictions on population in the 1960s, when the world population was nearly half of what it is today and world GDP was a fifth of today's. Were it not for global warming, which no one had firm science on at the time, there would be no question that such preferences were absurd, comparable to suggesting that America becoming a democracy would lead to anarchy. Environmentalists did not predict the future; their moral cause benefitted from a historical coincidence. And when that coincidence is mistaken for wisdom, other misjudgments can begin to cause harm.

Energy technologies that fight global warming such as nuclear have been opposed by environmentalists for just as long as they have been worried about overpopulation. That opposition has not changed now that global warming is a severe and pressing issue. In the context of climate action there has been an increased call for more local agriculture and less use of artificial chemicals, longstanding preferences of the environmental movement, even though such changes would actually increase carbon emissions. It now seems that we will survive global warming, although large questions remain as to how well. When the histories are written, it may not be that environmentalists were right all along.

—

Moral movements undergo many coincidences: some are a fortunate turning of the world towards justice, others a mistake of judgement that gives undue authority. Early abolitionists look much better after the rest of the world joined them against slavery, which was a correct reassessment of their moral leadership. Those who opposed the Russian Revolution look better after Stalin’s bloody years in power, but the ones who supported the Tsar’s absolute right to oppress his people are not made correct because their opponent turned out so bad.

The natural state of a human being is to believe that she is very, very important. This is healthy: part of the dynamism of civilization comes from people deeply caring about everything they do, even if not all of it turns out as important as was believed. But it is also, necessarily, on average false.

Historians believe that history is incredibly valuable for civilization, and that history is not given its proper due. Economists believe that more people should listen to economists. When historical circumstances change, what usually follows is a premature claim that prior preferences have been validated, rather than a sober examination of new evidence. The extraordinary move is not to be right beforehand, but to admit when one has been shown to be wrong.

In order to not fall into this persistent trap, we need to be careful about our own self-criticism and examination. If a particular event seems to validate our previous prediction and outlook, and show just how important we are, that may be so. But if every event seems to do this, and every piece of contradicting evidence can be dismissed as against the trend, that should be a cause for deep suspicion.

Living morally means having concern for the world outside of yourself, not just concern that the world validates your concerns. To actually respond to history by admitting you were wrong, by humbly changing career or expertise, and by committing to aiding those who you fought is a vastly underrated virtue.

—

AI Risk is a controversial topic. There are many extremely smart, well-informed people who believe that significantly fast advances in artificial intelligence may end up producing hyperintelligent agents which have the power to significantly harm or destroy human civilization. There are many who believe that this is ridiculous and far-fetched.

A close examination of the possibilities and actual technology of AI reveals that while the systems of today are nowhere near an imminent apocalypse, the potential may well be there. To the credit of those worried about AI risk, largely those within Effective Altruism, pandemics were also the subject of concern and preparation before COVID. I have become much more worried about the dangers of Artificial Intelligence after initially dismissing it.

Effective Altruism is a recent movement prominent in a very specific social slice of the modern west. It is deeply suspicious of emotional and cognitive biases, arguing that moral actions should be based on a neutral assessment of which actions are likely to do the most good. This means prioritizing mosquito nets for Sub-Saharan Africa over funding the arts or combatting inequality in the West. It means devoting funds to preventing engineered pandemics and nuclear war. And increasingly it also means funding research on AI and how to deal with AI risk.

While EA is far more consistent about self-criticism than almost any moral movement out there, it is not immune to biases. The EA population continues to be largely white, male, well-off, and quantitative in outlook, which may blind them to real possibilities for the greater good. Honest self-criticism means interrogating these biases, and especially being suspicious of arguments that seem to validate pre-existing preferences and interests. Accounts of the world that satisfy the EA view are suspicious; accounts that surprise and upset are worth celebrating. For example, a frequent accusation is that EA looks very little at radical changes to the financial and political make-up of the world because EAs themselves are quite comfortable and tend to be apolitical in outlook. This is a fairly strong case, but it can be countered with the strong case that radical changes to society as a whole have, on balance, a very uneven track record, and that low-cost interventions focusing on public health produce some of the same benefits with less risk. Pre-existing preferences are not disqualifying; they simply need to be responded to with serious thought and argument.

A view of the world that centers AI risk as one of the most important issues is extremely validating to the longstanding concerns and interests of Effective Altruists. Compared to the general population, EAs tend to be more interested in both science fiction and computers. Enthusiast communities related to science fiction and computers have been fascinated by AI since the post-war era, long before AI risk was really contemplated, and a substantial number of their members have wanted to devote their careers and lives to it as a deeply interesting and important area of research. The community that would eventually become EA wanted to work on AI long before AI Risk or even Effective Altruism existed. And work related to AI particularly requires the skills of programmers and software engineers, an overrepresented career path among Effective Altruists. Deep interest in AI risk started about a decade before the Deep Learning boom made AI a mainstream concern, seemingly fulfilling the prophecy that those concerned were ahead of the curve. But this was another coincidence, as no one predicted the takeoff would happen when it did.

The potential responses to AI are perhaps even more suspect. Many EA proposals are concrete and direct: this funding will buy this amount of medicine, which will save this number of lives. AI risk is much murkier, simply calling for more funding and research. The research does hold promise, but much of the response to the proposition “if we build an artificial general intelligence there is a good chance it will destroy humanity” seems to be “let’s build artificial general intelligence as fast as possible.”

OpenAI and DeepMind are both considered to be key funding targets for Effective Altruists concerned about AI risk, because both are cutting-edge labs that have AI safety teams. However, these two organizations are also likely the closest of anyone to creating world-destroying AI. This is justified with the possibility that if someone concerned with safety doesn’t make hyperintelligent AI first, someone not concerned with safety will instead. This possibility is real, but the argument is not airtight. For example, there are no visible projects by the United States or Chinese governments to create hyperintelligent AI, suggesting that the race aspect is overstated. Furthermore, there are many scenarios where even supposedly safe hyperintelligent AI research allows bad or simply misguided actors to build upon that research to create unsafe AI. If DeepMind and OpenAI disappeared tomorrow, it is not at all clear that world-destroying AI would be significantly more likely to be created.

The case that the best way to help humanity is to fund AI research looks highly suspect when the people making the case already wanted to spend their time researching AI, prior to and aside from any ethical demands. The model of the world presented by AI Risk maximalists validates the lives of intelligent, ambitious, secular, sci-fi-interested computer programmers to an almost ludicrous extent. Many of the people making the argument are arguing the point, and quite skillfully, but are also arguing that they may be the most important and moral people in the history of the universe. This would be quite the coincidence.

None of this substantially engages with the positive, objective arguments that we should be deeply concerned with AI risk, which despite my misgivings I’ve still found quite convincing. But I worry that the pre-moral enthusiasm for AI and computers has the potential to distort the incentives around a very serious danger.

My hope is that AI will turn out not to be as dangerous as experts fear, and that only good things will come from this technology. But if AI is inherently dangerous, if its risks vastly outweigh its potential benefits, then I hope that the field will die. I hope we will stop researching it and that, like any number of technologies, it will simply stagnate from lack of interest. This would be difficult for me and all like me who are deeply interested in the research and want the potential to do good for the world using our skills. But that difficulty is at least a sign that this might be the moral path for history to take.